Introduction

Large Language Models (LLMs) are reshaping how we interact with technology, from drafting emails to answering life-changing medical queries. But can we always trust the answers LLMs give? A small oversight in an email can be fixed quickly, but an inaccurate response to a medical query may have serious repercussions.

The catch is straightforward: power comes with responsibility. While LLMs are capable of impressive feats, they are also prone to errors, biases, and unanticipated behaviors. Testing is therefore crucial to ensure that LLMs produce accurate, fair, and useful outputs in real-world applications.

This blog explains why LLM evaluation is essential and takes a deep dive into the metrics and methods for testing LLMs. By the end, you will have a clearer understanding of why testing isn’t an afterthought: it’s the backbone of building better, more reliable AI.

Why is LLM Testing Required?

We start by laying out the benefits of thorough LLM testing, each paired with a concrete application scenario.

Ensure Model Accuracy

Despite being trained on massive amounts of data, LLMs can still give inaccurate responses, ranging from nonsensical outputs to factually incorrect information. Testing plays a crucial role in identifying these inaccuracies and ensuring that the model produces reliable, factually correct outputs.

Example: In critical applications like healthcare consulting, responses must be precise. A model error could lead to incorrect medical advice or even harm to patients.

Detecting and Mitigating Bias

LLMs inherit biases present in their training data, such as gender, racial, or cultural biases. This can lead to discrimination or reinforce harmful stereotypes. Rigorous testing helps identify these biases, enabling developers to mitigate them and ensure fairness in the model’s behavior.

Example: In recruitment tools, LLMs are used to help evaluate resumes and job applications. If a model exhibits gender or racial bias, it could inadvertently favor or reject candidates based on characteristics unrelated to their qualifications.

Improving Robustness and Generalization

LLMs are often evaluated on their performance in ideal conditions, but real-world inputs can be much more varied and unpredictable. Robustness testing ensures that a model performs well even under edge cases or adversarial conditions.

Example: Consider a customer support chatbot designed for multilingual use. If the model is trained mainly on English datasets and generalizes poorly to other languages or dialects, it may fail to respond appropriately in non-English conversations.

Optimizing User Experience

Testing LLMs from a user experience (UX) perspective is vital for understanding how the model interacts with users. A well-tested model will generate outputs that are coherent, relevant, and engaging, enhancing user satisfaction and promoting retention.

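Example: Any application built on in-context responses, such as a conversational assistant, where each reply has to stay coherent with and relevant to the conversation so far.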

Regulatory and Ethical Compliance

For industrial AI applications, compliance with ethical guidelines and regulations is crucial. Compliance testing helps ensure that LLMs do not violate privacy regulations and that they adhere to ethical standards.

Example: Personalized content recommendation systems may use user data to provide tailored experiences. Many regions, such as the European Union, have implemented data protection regulations like GDPR, which govern how personal data is used. An LLM in such a system should be tested to confirm that it does not expose or misuse personal data.

LLM Evaluation Metrics and Methods

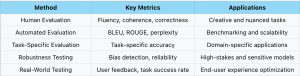

Now we explore common evaluation methods, the key metrics for each, and the scenarios in which they are most effective. Below is the roadmap of this section.

Human Evaluation

Human evaluation means having users or experts assess LLM outputs. This approach is particularly useful in two cases: creative tasks, where subjective judgment matters, and tasks requiring a professional background, where only domain experts can give a reliable evaluation. Human evaluators can rate the fluency, coherence, and correctness of the generated text, helping to assess whether the model meets human standards for quality.
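To make the rating step concrete, here is a minimal sketch of how scores from several evaluators might be aggregated. The 1-5 scale, the criterion names, and the `aggregate_ratings` helper are illustrative assumptions, not a standard protocol.

```python
from statistics import mean, pstdev


def aggregate_ratings(ratings):
    """Aggregate per-criterion scores from several evaluators.

    `ratings` is a list with one dict per evaluator, mapping a criterion
    name (e.g. "fluency") to that evaluator's score (assumed 1-5 scale).
    """
    criteria = sorted({criterion for r in ratings for criterion in r})
    summary = {}
    for criterion in criteria:
        scores = [r[criterion] for r in ratings if criterion in r]
        summary[criterion] = {
            "mean": mean(scores),
            # A large spread signals that evaluators disagree on this criterion.
            "spread": pstdev(scores),
            "n": len(scores),
        }
    return summary


# Two hypothetical evaluators rating the same model output.
example = aggregate_ratings([
    {"fluency": 4, "coherence": 5, "correctness": 2},
    {"fluency": 4, "coherence": 3, "correctness": 4},
])
```

A high spread on a criterion is a hint that the rating guidelines for it may need tightening before the scores are trusted.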

Applications: Creative content generation, design, scientific domains, etc.

Automated Evaluation

Automated evaluation uses computational metrics to assess the quality of LLM outputs. These metrics are often used for benchmarking models on standardized tasks, so they appear mostly in research papers. Automated metrics follow precise mathematical definitions and can quickly process large volumes of data, making them ideal for testing models at scale. Many of them were originally adopted for specific NLP tasks, such as the BLEU score for machine translation. We introduce several metrics that have been widely used for evaluation on NLP tasks.

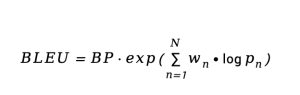

- BLEU (Bilingual Evaluation Understudy)

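BLEU compares the n-grams of the generated translation with those of a reference translation, assigning a score between 0 and 1. Higher BLEU scores indicate that the generated text is closer to the reference.

$$\mathrm{BLEU} = BP \cdot \exp\left(\sum_{n=1}^{N} w_n \log p_n\right)$$

where $BP$ is the brevity penalty, $p_n$ is the (clipped) precision of n-grams of order $n$, and $w_n$ is the weight given to each order, typically $1/N$.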
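The BLEU computation can be sketched in a few lines of Python. This is a simplified illustration that assumes a single reference, whitespace tokenization, uniform weights, and no smoothing; established implementations handle multiple references and smoothing, so the `bleu` helper below is for intuition only.

```python
import math
from collections import Counter


def ngrams(tokens, n):
    """Return a Counter of all n-grams (as tuples) in a token list."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def bleu(candidate, reference, max_n=4):
    """Sentence-level BLEU against one reference (uniform weights, unsmoothed)."""
    cand, ref = candidate.split(), reference.split()
    if not cand:
        return 0.0
    log_precision_sum = 0.0
    for n in range(1, max_n + 1):
        cand_counts, ref_counts = ngrams(cand, n), ngrams(ref, n)
        # Clipped counts: a candidate n-gram is credited at most as often
        # as it appears in the reference.
        overlap = sum(min(c, ref_counts[g]) for g, c in cand_counts.items())
        total = sum(cand_counts.values())
        if overlap == 0 or total == 0:
            return 0.0  # without smoothing, any zero precision gives BLEU = 0
        log_precision_sum += math.log(overlap / total) / max_n
    # Brevity penalty: penalize candidates shorter than the reference.
    bp = 1.0 if len(cand) > len(ref) else math.exp(1 - len(ref) / len(cand))
    return bp * math.exp(log_precision_sum)


score = bleu("the cat sat on the mat", "the cat sat on a mat", max_n=2)
```

Here the unigram precision is 5/6 and the bigram precision is 3/5, so the score is their geometric mean, about 0.71. Note how the repeated "the" in the candidate is only credited once because of clipping.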

- ROUGE (Recall-Oriented Understudy for Gisting Evaluation)

ROUGE is a set of metrics used for evaluating text summarization and other tasks where generating a relevant summary is the goal. It focuses on recall, measuring how many n-grams of the reference also appear in the generated text. A higher ROUGE score indicates better performance.

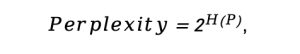
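As an illustration of the recall-oriented idea behind ROUGE, the sketch below computes ROUGE-N recall for a single reference. It assumes whitespace tokenization and omits the precision, F-measure, and ROUGE-L variants that full implementations also report; the `rouge_n_recall` name is our own.

```python
from collections import Counter


def rouge_n_recall(candidate, reference, n=1):
    """Fraction of the reference's n-grams that also appear in the candidate."""
    cand, ref = candidate.split(), reference.split()
    cand_counts = Counter(tuple(cand[i:i + n]) for i in range(len(cand) - n + 1))
    ref_counts = Counter(tuple(ref[i:i + n]) for i in range(len(ref) - n + 1))
    total = sum(ref_counts.values())
    if total == 0:
        return 0.0  # reference too short to contain any n-gram of this order
    # Each reference n-gram is matched at most as often as the candidate has it.
    overlap = sum(min(c, cand_counts[g]) for g, c in ref_counts.items())
    return overlap / total


rouge_1 = rouge_n_recall("the cat sat on the mat", "the cat sat on a mat", n=1)
rouge_2 = rouge_n_recall("the cat sat on the mat", "the cat sat on a mat", n=2)
```

In this example five of the six reference unigrams and three of the five reference bigrams are recovered, giving ROUGE-1 recall of about 0.83 and ROUGE-2 recall of 0.6.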

- Perplexity

Perplexity measures how well a probabilistic model predicts a sample. It is commonly used for evaluating language models, indicating how effectively the model predicts the next word/token in a sequence. A lower perplexity value indicates that the model is better at predicting the next token. It’s often used as a proxy for fluency and coherence.

$$\mathrm{Perplexity} = 2^{H(p)}$$

where $H(p)$ is the cross-entropy, measured in bits, of the model’s predicted distribution on the evaluation text.

Applications: machine translation, summarization, question-answering systems, etc.
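A small sketch can make the perplexity definition tangible. It assumes the common formulation in which perplexity is 2 raised to the cross-entropy in bits, and that we already have the probability the model assigned to each actual token; the `perplexity` helper and the probability lists are illustrative.

```python
import math


def perplexity(token_probs):
    """Perplexity from the probabilities a model assigned to the true tokens."""
    if not token_probs:
        raise ValueError("need at least one token probability")
    # Cross-entropy in bits: average negative log2-probability per token.
    cross_entropy = -sum(math.log2(p) for p in token_probs) / len(token_probs)
    return 2 ** cross_entropy


# A model that gives every true token probability 0.25 is, on average,
# as uncertain as a uniform choice among 4 tokens.
uniform_like = perplexity([0.25, 0.25, 0.25, 0.25])
confident = perplexity([0.9, 0.8, 0.95])
```

The first call returns 4, matching the intuition that perplexity is the effective number of equally likely choices the model is hesitating between; the more confident model scores lower.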

Task-Specific Evaluation

LLMs have shown potential on increasingly challenging tasks, and these tasks call for dedicated metrics that evaluate performance thoroughly. Such metrics are directly tied to the model’s ability to solve complicated real-world problems. For example, to evaluate the scientific question-answering performance of LLMs, recent research proposed a metric called RACAR, which leverages the power of GPT-4. This metric consists of five dimensions: Relevance, Agnosticism, Completeness, Accuracy and Reasonableness. Indeed, using GPT-4 to design evaluation metrics is already happening!

Applications: education, scientific domains, medical diagnosis, legal document analysis.

Robustness Testing

Robustness testing checks that LLMs can handle edge cases, adversarial inputs, and unexpected conditions. For sensitive applications, this type of testing is critical to avoid disastrous consequences. It also includes bias detection to ensure the model doesn’t make discriminatory decisions based on problematic inputs. Concrete assessments may include adversarial attack tests, fairness tests, out-of-distribution tests, perturbation tests, etc.

Applications: autonomous vehicles, healthcare, and other high-risk applications.
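As one example of a perturbation test, the sketch below injects small typos into prompts and measures how often the model's output stays the same as on the clean prompt. The `model` argument is any callable from prompt to output; `toy_model` is a hypothetical keyword-based stand-in used only so the example runs, and exact-match comparison is a simplifying assumption that suits classification-style outputs better than free text.

```python
import random


def swap_adjacent_chars(text, rng):
    """Introduce one typo by swapping two adjacent characters."""
    if len(text) < 2:
        return text
    i = rng.randrange(len(text) - 1)
    chars = list(text)
    chars[i], chars[i + 1] = chars[i + 1], chars[i]
    return "".join(chars)


def consistency_rate(model, prompts, perturb, trials=5, seed=0):
    """Fraction of perturbed prompts whose output matches the clean output."""
    rng = random.Random(seed)  # fixed seed keeps the test reproducible
    matches = total = 0
    for prompt in prompts:
        clean_output = model(prompt)
        for _ in range(trials):
            matches += model(perturb(prompt, rng)) == clean_output
            total += 1
    return matches / total if total else 1.0


def toy_model(prompt):
    """Hypothetical stand-in for an LLM-based intent classifier."""
    return "refund" if "refund" in prompt.lower() else "other"


rate = consistency_rate(toy_model, ["I want a refund", "Where is my order?"],
                        swap_adjacent_chars)
```

A rate well below 1.0 suggests the model is brittle to the chosen perturbation. The same harness can be reused with other perturbation functions, such as paraphrasing or character deletion.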

Real-World Testing

Real-world testing involves collecting feedback from end users and observing how they interact with the model in practical scenarios. Metrics like user satisfaction, task success, and engagement help developers understand how well the model meets user needs. If the LLM targets human users, this method overlaps heavily with human evaluation. In addition, once the LLM system is deployed, testing should also cover system stability, inference latency, resource consumption, etc.
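The metrics mentioned above can be computed from logged sessions. The sketch below is one possible shape for such a summary; the session fields (`latency_ms`, `success`, `rating`) and the `summarize_sessions` helper are assumptions made for illustration, not a fixed logging schema.

```python
import math
from statistics import mean, median


def summarize_sessions(sessions):
    """Summarize logged sessions.

    Each session is a dict with these (assumed) keys:
      'latency_ms': response latency in milliseconds
      'success':    True if the user's task was completed
      'rating':     1-5 satisfaction score, or None if the user gave none
    """
    if not sessions:
        raise ValueError("no sessions to summarize")
    latencies = sorted(s["latency_ms"] for s in sessions)
    ratings = [s["rating"] for s in sessions if s["rating"] is not None]
    # Nearest-rank 95th percentile: smallest latency with at least 95%
    # of observations at or below it.
    p95 = latencies[math.ceil(0.95 * len(latencies)) - 1]
    return {
        "task_success_rate": mean([1.0 if s["success"] else 0.0 for s in sessions]),
        "mean_rating": mean(ratings) if ratings else None,
        "median_latency_ms": median(latencies),
        "p95_latency_ms": p95,
    }


report = summarize_sessions([
    {"latency_ms": 100, "success": True, "rating": 5},
    {"latency_ms": 200, "success": True, "rating": 4},
    {"latency_ms": 300, "success": False, "rating": None},
    {"latency_ms": 400, "success": True, "rating": 3},
])
```

Tail latency (p95) is reported alongside the median because a small share of slow responses can dominate how users perceive the system, even when the typical response is fast.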

Best Practices and Case Studies

Several companies have successfully improved their LLM performance through extensive testing. We take Claude, an LLM developed by Anthropic, as an example of a model whose newer generation improved through robustness testing.

In the early stages, Claude 1 showed significant weaknesses in handling adversarial inputs, such as biased queries and subtle misleading prompts. It also struggled with complex, multi-step reasoning tasks. Anthropic conducted extensive robustness testing focusing on bias detection, safety, and accuracy under ambiguity. They tested Claude’s performance using adversarial inputs, ethical dilemma questions, and complex logical problems.

Anthropic fine-tuned Claude 2 using more robust, curated datasets focused on reducing bias and improving long-term reasoning. Consequently, Claude 2 showed a substantial improvement in safety (it was less likely to produce biased or harmful responses) and in logical coherence when handling multi-step or complex reasoning tasks. It also demonstrated better resilience to adversarial inputs, providing more accurate, contextually appropriate answers even when the input was ambiguous or adversarial.

Popular Tools for Testing LLMs

Several tools have emerged to support LLM testing. One notable tool is WhaleFlux.

Other popular LLM testing tools include:

- Hugging Face’s transformers library: Provides easy access to a range of pre-trained models; the companion datasets and evaluate libraries provide benchmarking datasets and metrics.

- OpenAI’s evaluation framework (OpenAI Evals): Allows developers to assess models on various criteria like task completion, creativity, and coherence.

Conclusion

Testing LLMs is an essential step in ensuring that models are accurate, fair, robust, and optimized for user experience. From human evaluations to real-world user feedback, a comprehensive testing strategy is key to addressing the challenges posed by LLMs in practical applications.

We encourage developers to adopt these strategies in their LLM development and testing pipelines, and to continuously iterate on their models based on feedback and testing results. Share your experiences and feedback to contribute to the ongoing improvement of LLMs!